Research Subdomains with SemRush


Have you noticed a rise in content being pushed to and created on sub-domains of authoritative websites?

A strategy born out of Google’s Panda update (and its subsequent iterations) has been to move specific content to its own sub-domain so that it isn’t algorithmically classified as a content farm. There are a few reasons for this, and it works best when the sub-domain sits on an existing domain that already has a fair amount of authority and trust.

Google initially treats sub-domains as somewhat separate sites from the root domain. Over time, though, most of the authority the sub-domain gains can be passed back to the root domain (or to pages on it) through internal linking, and vice versa.

Another point of interest with this approach is that penalties generally do not spread from the sub-domain to the root domain. That means you can be a bit more aggressive when marketing the sub-domain without worrying so much that perceived lower-quality content there will take down the entire root domain.

Bringing in SemRush

Over time, SemRush has consistently given me the best organic SEO data. Their interface is incredibly slick and has been updated frequently as the product has matured. The updates are solid, too, and they don’t pile on useless custom metrics that cloud the interpretation of the relatively clean data they provide.

When you search a core domain, SemRush groups the entire domain (sub-domains and root) together. At present, it is not possible to search just the sub-domain, and the sub-domain data is only available to paying subscribers.

I like the domain grouping for three reasons:

- You can uncover sub-domains you might not have known about.

- They have generous export limits and multiple pricing tiers, so you can get all the keywords you need on a site (sub-domain and root domain).

- You can cross-compare rankings from the root domain and its sub-domains to get a sense of the site’s overall strategy.
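Once you have an export, one way to see the sub-domain picture at a glance is to bucket each ranking URL by its host and compare the buckets. The sketch below assumes a simplified export of (keyword, position, URL) rows; the domain, keywords, and function names are hypothetical placeholders, not SemRush’s actual export format or API.

```python
from collections import defaultdict
from urllib.parse import urlparse


def group_by_host(rows, root_domain):
    """Bucket (keyword, position, url) rows by the host that ranks.

    "www." is folded into the root; any other host is treated as a sub-domain.
    """
    groups = defaultdict(list)
    for keyword, position, url in rows:
        host = urlparse(url).hostname or ""  # urlparse lowercases the hostname
        if host == "www." + root_domain:
            host = root_domain
        groups[host].append((keyword, position))
    return dict(groups)


def summarize(groups, root_domain):
    """Return (host, is_subdomain, keyword_count, avg_position), most keywords first."""
    summary = []
    for host, ranked in groups.items():
        avg = sum(position for _, position in ranked) / len(ranked)
        summary.append((host, host != root_domain, len(ranked), round(avg, 1)))
    return sorted(summary, key=lambda item: item[2], reverse=True)


# Hypothetical rows standing in for an export; not real SemRush data.
rows = [
    ("blue widgets", 4, "https://www.example.com/widgets/blue"),
    ("widget repair", 2, "https://alice.example.com/widget-repair"),
    ("widget history", 7, "https://alice.example.com/history"),
    ("red widgets", 9, "https://bob.example.com/red"),
]

for host, is_sub, count, avg in summarize(group_by_host(rows, "example.com"), "example.com"):
    print(f"{host} sub-domain={is_sub} keywords={count} avg_position={avg}")
```

Hosts with many keywords and a strong average position are the sub-domains worth a closer look, and setting them next to the root-domain row gives a rough version of the cross-comparison described in the list above.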

Rarely have I run across a research project where I couldn’t get all the data I needed. Their plans allow you to export anywhere from 10,000 to 100,000 keywords and give you 3,000 to 10,000 requests per day (tons of data).

If you have a really large project outside of the above-mentioned limits, you can request a custom data report from them as well.

Hunting Down Targets

Some of the best targets can be found simply by following trusted news sources that cover these kinds of strategies. In the linked article, for example, you’ll see a self-professed Panda recovery by HubPages via the “move content to sub-domains” route.

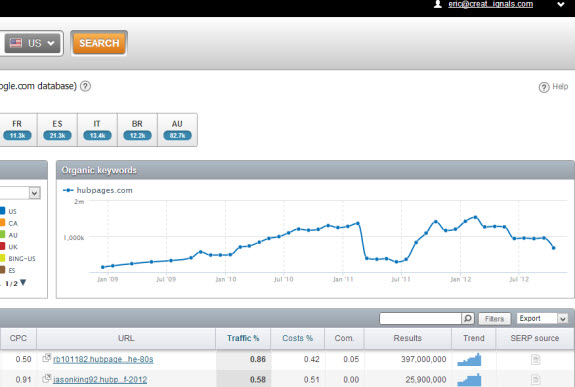

As you can see from this chart, there was a huge drop after Panda, then a recovery for a period of time, and then a somewhat noticeable slowdown. Still, it certainly appears that their strategy worked for a while, definitely long enough for them to right the ship or figure out a new approach.

Another way to find prospects for research is to cobble together various Panda “Winners and Losers” lists. You don’t need to target just the winners, either. Remember, the goal is to uncover keywords for your campaign(s).

Finding a site that made these kinds of changes and benefited from them is a bonus, not a necessity. Plus, some of these lists may reflect the success of the root domain itself rather than any new sub-domains added alongside it.

In addition, you can gather both keyword and content ideas from the winning and losing sites on those lists. The keywords and content are likely still worth pursuing; the execution may simply have been poor on a losing site, or that site may have been hit by another, somewhat unrelated algorithmic update (Penguin, for example).

Here are some of the initial lists:

- SeoBook’s Initial Analysis

- 1st Round from Search Metrics

- Late 2011 List from SearchEngineLand

- 1st Quarter 2012 List from SearchEngineLand

Using SemRush

So let’s take HubPages as an example. What they did (mostly) was move their author pages to sub-domains. Topical and category-level pages remain on the root domain, while the unique author articles have been published to the authors’ own sub-domains of hubpages.com.

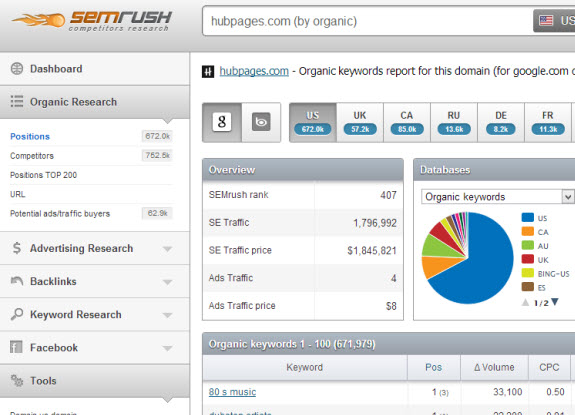

SemRush also incorporates Bing data (as you’ll see in the image below), which you can use as well, but for the purposes of this post we’ll focus on Google in the US (note that many other countries are available too).

For clarity, the single screenshot of the initial results page is split into a left half (1st image) and a right half (2nd image) so you can clearly see all of the data and options:

So, initially, we are looking at over 670,000 keywords! Chances are you don’t need all that data. Fortunately, SemRush has some killer filtering available before export. Your options are:

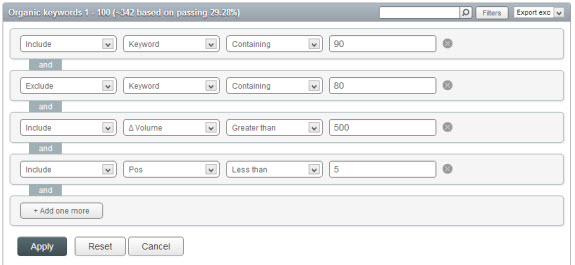

- Include/Exclude chosen parameters (see following bullet points)

- Keyword and URL, with options for contains, exactly matches, begins with, and ends with

- Current ranking position and CPC, with options for equal to, less than, and greater than

- Traffic %, Cost %, Competition, and Total Results, with options for equal to, less than, and greater than

As you can see, these make for powerful filtering because you can combine them, and there really is no limit: you can add as many filters as you want! These options make it super easy to find specific keyword targets (or, in the case of HubPages, a specific author).
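To make the “combine as many filters as you want” idea concrete, here is a small sketch of how include/exclude rules with these operators could be modeled and applied to exported rows. It assumes the filters combine with AND (every rule must pass), which matches how the ’90s music example in this post stacks them. The field names, the `Filter` class, and `apply_filters` are hypothetical illustrations, not part of SemRush.

```python
import operator
from dataclasses import dataclass
from typing import Any

# Text operators mirror the listed options (contains, exactly matches,
# begins with, ends with); numeric ones mirror equal to / less than / greater than.
TEXT_OPS = {
    "contains": lambda value, arg: arg in value,
    "exact": lambda value, arg: value == arg,
    "begins_with": str.startswith,
    "ends_with": str.endswith,
}
NUM_OPS = {"eq": operator.eq, "lt": operator.lt, "gt": operator.gt}


@dataclass
class Filter:
    include: bool  # True keeps matching rows, False drops them
    field: str     # hypothetical column name, e.g. "keyword" or "cpc"
    op: str        # a key of TEXT_OPS or NUM_OPS
    arg: Any

    def matches(self, row: dict) -> bool:
        value = row[self.field]
        ops = TEXT_OPS if isinstance(value, str) else NUM_OPS
        return ops[self.op](value, self.arg)

    def keeps(self, row: dict) -> bool:
        return self.matches(row) if self.include else not self.matches(row)


def apply_filters(rows, filters):
    """Keep only rows that pass every filter (assumed AND semantics)."""
    return [row for row in rows if all(f.keeps(row) for f in filters)]


# Hypothetical rows, not real SemRush data.
rows = [
    {"keyword": "how to tie a tie", "url": "https://www.example.com/tie", "position": 3, "cpc": 0.4},
    {"keyword": "how to buy a tie", "url": "https://www.example.com/buy", "position": 6, "cpc": 1.8},
    {"keyword": "silk tie care", "url": "https://www.example.com/care", "position": 1, "cpc": 2.1},
]
filters = [
    Filter(True, "keyword", "begins_with", "how to"),
    Filter(True, "cpc", "gt", 1.0),
]
print([row["keyword"] for row in apply_filters(rows, filters)])  # ['how to buy a tie']
```

Keeping each rule as a small object means adding one more filter is just appending to the list, which is roughly how stacking filters in the interface feels.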

HubPages covers a ton of topics, so you might only be interested in a few.

Let’s assume you dig ’90s music and want to start a site about it. Simply click the Filters button below the organic keyword chart and start adding filters:

Here, I’ve included keywords that contain “90” and have a volume greater than 500 searches per month in Google, excluded “80” in case any of those snuck in, and asked to see only keywords where HubPages ranks in a position less than 5 (I want to see their most important keywords).

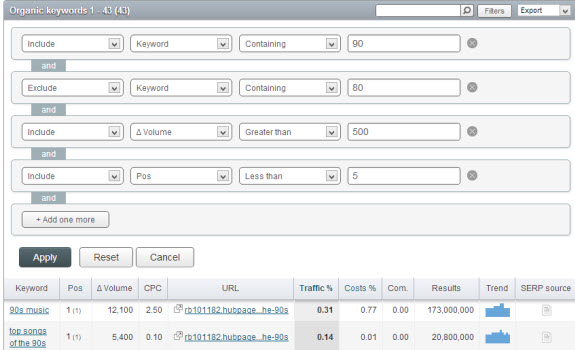

Now we are left with only 43 keywords out of 670k+, and the beauty is that when we export, we get only the filtered data.
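The same four filters can be reproduced offline on an exported CSV, which is handy for double-checking an export or re-slicing it later. This is a sketch: the column headers (`Keyword`, `Position`, `Search Volume`, `Url`) and the sample rows are assumptions for illustration, so match them to the header row of your own export.

```python
import csv
import io

# Hypothetical stand-in for an exported CSV; headers are assumed, data is made up.
EXPORT = """Keyword,Position,Search Volume,Url
90s rock bands,2,1900,https://www.example.com/90s-rock
80s and 90s hits,3,880,https://www.example.com/decades
best 90s albums,4,320,https://www.example.com/albums
90s boy bands,7,2900,https://www.example.com/boy-bands
1990s grunge,1,720,https://www.example.com/grunge
"""


def music_90s_targets(csv_text):
    """Apply the example's filters: contains "90", volume > 500,
    excludes "80", and ranking position less than 5."""
    keep = []
    for row in csv.DictReader(io.StringIO(csv_text)):
        keyword = row["Keyword"].lower()
        position = int(row["Position"])
        volume = int(row["Search Volume"])
        if "90" in keyword and "80" not in keyword and volume > 500 and position < 5:
            keep.append((keyword, position, volume))
    return keep


for keyword, position, volume in music_90s_targets(EXPORT):
    print(keyword, position, volume)
```

Note that “contains 90” is a plain substring match, so it also picks up terms like “1990s grunge”. That is usually what you want here, but it is worth remembering when the number shows up in unrelated keywords.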

Hitting Sub-Domains

You might have guessed this already, but now we can dive right into sub-domain research.

We can clear out all these filters and show only this specific author’s sub-domain. If you already know which sub-domain you want to research, these powerful filters can cut your research time considerably.

If I wanted to dig further into this specific sub-domain, all I would have to do is copy it and add it as a filter (which leaves 30k+ results from this sub-domain alone).
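If you are working from an export instead, a similar sub-domain filter can be applied by comparing each URL’s parsed hostname. A hostname comparison is a slightly stricter stand-in for a “URL contains” filter, since it won’t match a root-domain page whose path merely mentions the sub-domain. The function name, field names, and example hosts below are hypothetical.

```python
from urllib.parse import urlparse


def rows_for_subdomain(rows, subdomain):
    """Keep rows whose URL is hosted exactly on `subdomain`.

    Comparing parsed hostnames (which urlparse lowercases) avoids the false
    matches a plain substring test can produce on URL paths.
    """
    target = subdomain.lower()
    return [row for row in rows if (urlparse(row["url"]).hostname or "") == target]


# Hypothetical rows; the second URL mentions the sub-domain only in its path.
rows = [
    {"keyword": "widget repair", "url": "https://alice.example.com/widget-repair"},
    {"keyword": "alice interview", "url": "https://www.example.com/alice.example.com-interview"},
    {"keyword": "red widgets", "url": "https://bob.example.com/red"},
]
print([row["keyword"] for row in rows_for_subdomain(rows, "alice.example.com")])
```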

This is why SemRush is my tool of choice for sub-domain research. You can take a site of any size and quickly whittle it down to the specific product, author, topic, etc. that you need or want to research.

Give SemRush a try today; it really is one of the best tools on the market.
